Aviation English Testing: TEAC Interlocutor Training

Scenario-Based e-Learning

This example project showcases some of my skills in designing e-learning solutions. TEAC is an internationally used language proficiency test, and its examiners require standardisation training to ensure they all obtain the best English language sample from their candidates.

The aim of this training is to remind them of important ideas in test delivery.


Overview

AUDIENCE: Recently qualified Aviation English test examiners.

MY ROLES: Action mapping, storyboarding, visual element design, prototyping, instructional design, eLearning design.

TOOLS: MindMeister, Adobe XD, Adobe Illustrator, Articulate Storyline

The Test of English for Aeronautical Communication (TEAC) serves as an internationally recognized language assessment for aviation professionals, including pilots and air traffic controllers. This human-to-human interaction test aims to evaluate candidates’ English proficiency in alignment with the ICAO Language Proficiency Requirements.

A key component of training TEAC examiners involves the utilization of Extension Questions – designed to prompt candidates to provide additional examples of their language use for assessment purposes.

However, the implementation of Extension Questions varies among examiners, posing a potential threat to test reliability. The inconsistency in examiner practices raises concerns about fairness in testing, as performance outcomes can be influenced significantly by the specific examiner assigned to a candidate.

Recognizing the potential impact on fairness, it is imperative for the testing organization to ensure examiners receive periodic supplementary training, specifically focusing on refining their approach to Extension Questions. Consequently, the testing organization expressed a strong interest in exploring metrics that can enhance and standardize the use of Extension Questions, thereby improving overall test reliability.

Method

Drawing on my extensive experience as an examiner, I worked with Subject Matter Experts (SMEs) to design the training content. Together with numerous colleagues, I gathered instances of suboptimal examiner techniques that had led to diminished test performance.

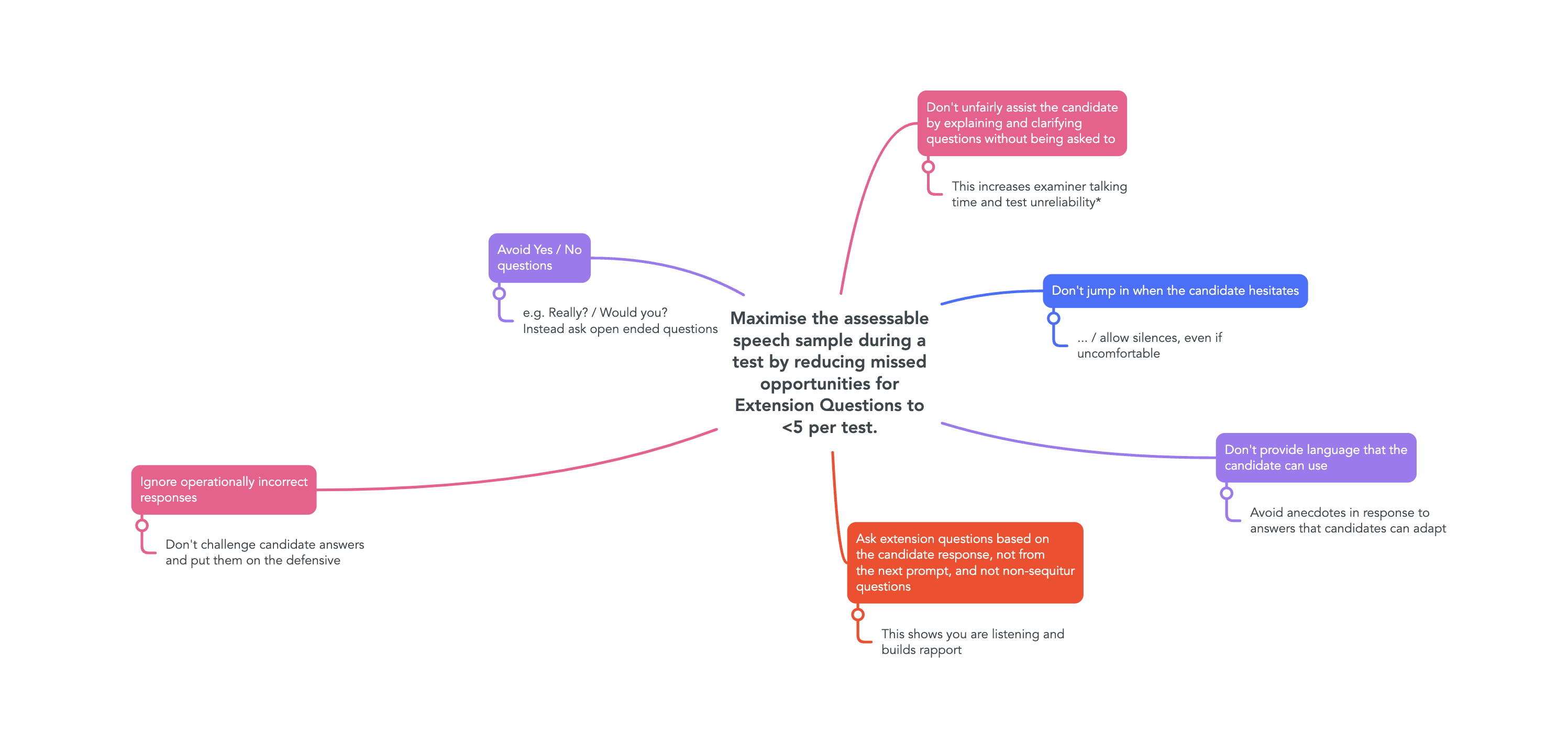

The primary objective of our training initiative was to reduce the number of Extension Question opportunities that examiners miss. The language test’s overarching goal is to elicit a comprehensive language sample, and a crucial part of this is the strategic use of Extension Questions: queries that build upon the candidate’s language.

Not all Extension Questions are created equal; some are more effective than others. The metric employed to evaluate Extension Question quality centered on the amount of additional language prompted from the candidate. For instance, closed questions demanding a simple Yes/No answer were identified as likely to yield minimal additional language. More nuanced are those questions that introduce subtle affective challenges in the examiner-candidate interaction.

Our targeted objective for examiners was to minimize instances of missed Extension Question opportunities to fewer than five per test. This involved directing examiner attention toward common situations where they or their peers often overlooked such opportunities during the language test.
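The target above can be expressed as a simple check. The following is a minimal sketch only: the `Opportunity` record, the function names, and the use of a word count as a stand-in for "amount of additional language" are all assumptions made for illustration, not the scoring method used in the project.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    """Hypothetical record of one point in a test where an Extension Question was possible."""
    asked: bool              # did the examiner ask an Extension Question here?
    words_elicited: int = 0  # assumed proxy: extra candidate words prompted (0 if not asked)


MISSED_TARGET = 5  # from the text: fewer than five missed opportunities per test


def missed_count(opportunities):
    """Count opportunities where no Extension Question was asked."""
    return sum(1 for o in opportunities if not o.asked)


def meets_target(opportunities, target=MISSED_TARGET):
    """True when the number of missed opportunities is strictly below the target."""
    return missed_count(opportunities) < target


def mean_words_elicited(opportunities):
    """Average additional words per question asked; 0.0 if none were asked."""
    asked = [o.words_elicited for o in opportunities if o.asked]
    return sum(asked) / len(asked) if asked else 0.0
```

A closed Yes/No question would show up in such a record as an opportunity that was taken but elicited very few words, which is why the sketch tracks both values separately.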

To identify specific scenarios, I engaged with SME examiners to share instances in which poorly formulated Extension Questions resulted in reduced candidate output. Subsequently, I categorized these instances into five distinct “categories” of misjudged Extension Questions, serving as exemplary cases for our training.

During our collaborative Teams meeting, we delved into this phenomenon, with examiners providing examples of missed opportunities derived from personal experiences and observations of other tests. From these discussions, we constructed a MindMeister chart outlining scenarios framed as both “Do”s and “Don’t”s, accompanied by a rationale.

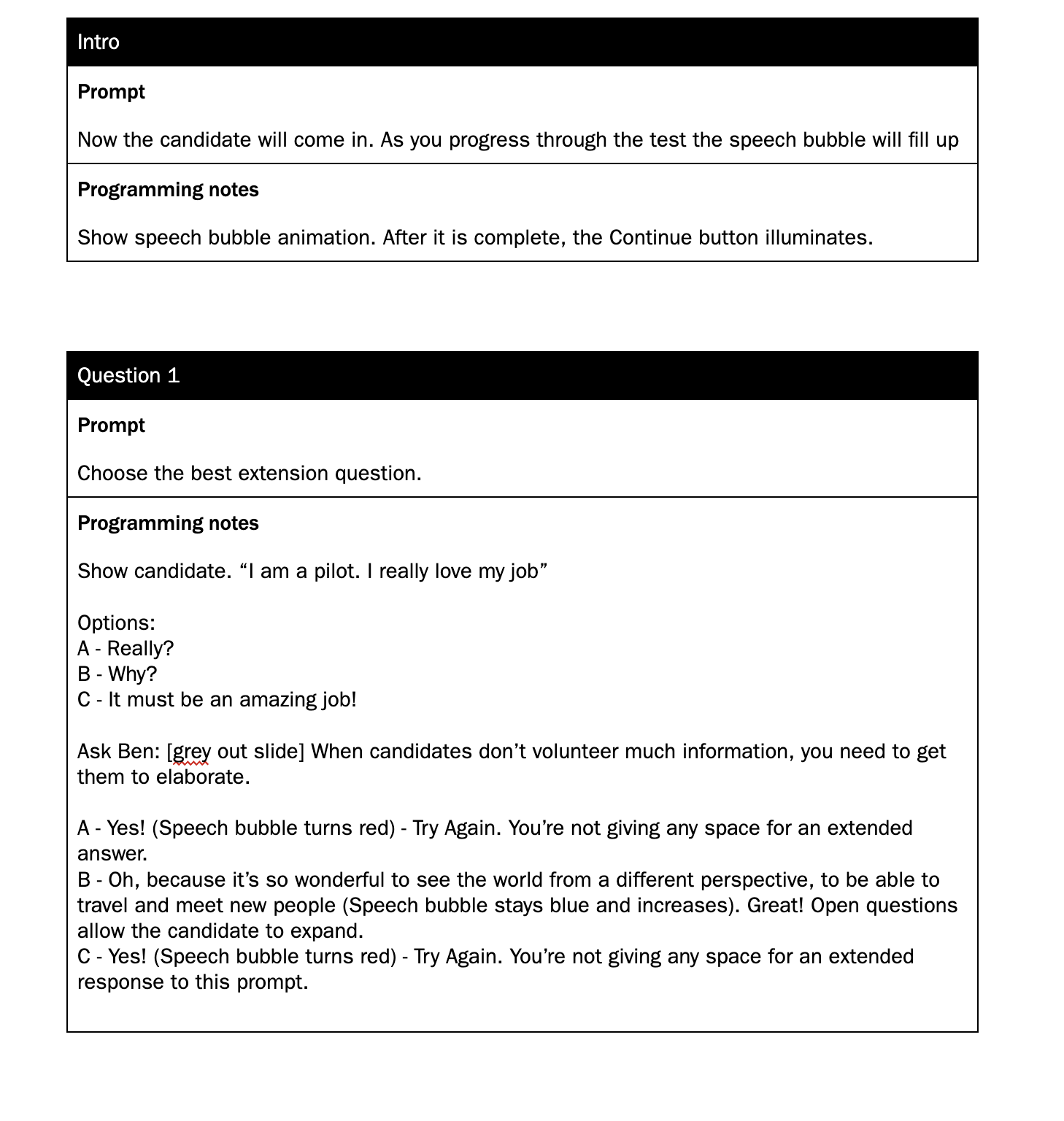

Storyboarding

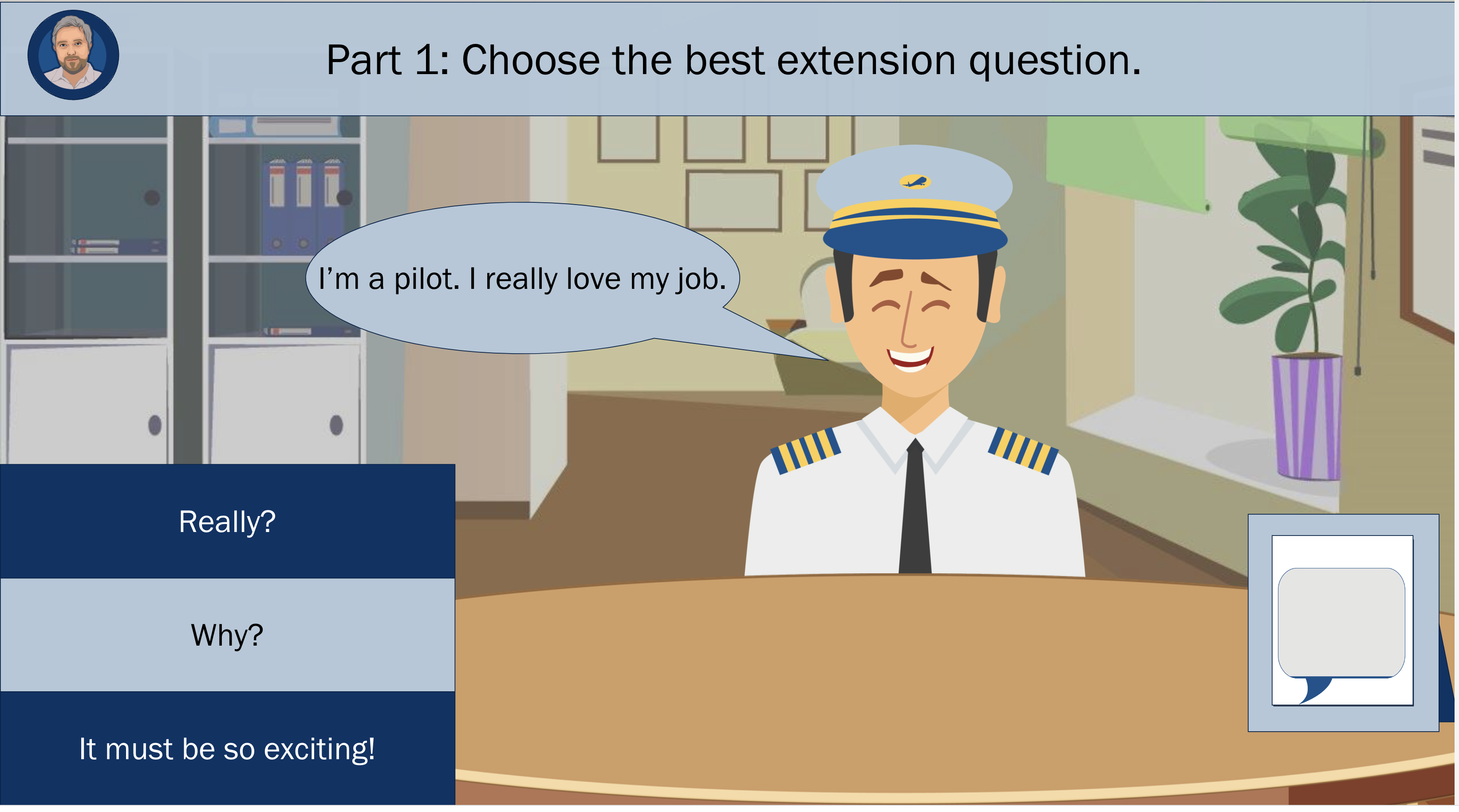

Having amassed a substantial collection of examiner “Do”s and “Don’t”s, we proceeded to craft scenarios suitable for integration into a visual, scenario-based e-learning narrative. The concept involved immersing the user in the role of an examiner conducting a language test with a pilot candidate, utilizing the identified situations from the MindMeister ideas.

We carefully selected five scenarios to showcase a diverse range of conversational contexts. For each scenario, a detailed script was developed, encompassing:

1. Candidate Utterance:

- A statement or response from the pilot candidate during the test.

2. Examiner Options for Extension Questions:

- Three choices provided for the examiner, with one being the correct option and the other two likely to yield limited assessable language.

3. Responses to the User:

- An explanation accompanying each option, elucidating why the Extension Question was deemed suitable or unsuitable.

4. Additional Help from the Avatar:

- Guidance that the user (examiner) could seek from a Help Avatar, represented by a cartoon depiction of a seasoned senior examiner familiar to all participants.

These components were drafted as a series of programming notes. This approach facilitated the structured development of the eventual Storyline object by planning the context and content of each slide before advancing to the visual design stage. The programming notes served as a blueprint, ensuring a coherent and effective progression in both narrative and educational content throughout the e-learning experience.
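The four script components listed above map naturally onto a small data structure. The sketch below is illustrative only: the class and field names are hypothetical, and the actual programming notes were written as prose for Storyline rather than as code.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Option:
    text: str      # the Extension Question offered to the user
    correct: bool  # True for the one option likely to elicit more language
    feedback: str  # explanation shown after the user picks this option


@dataclass
class Scenario:
    candidate_utterance: str  # what the pilot candidate says
    options: List[Option]     # the examiner's three choices
    mentor_help: str          # guidance available from the Help Avatar

    def validate(self) -> None:
        """Check the shape described in the text: three options, exactly one correct."""
        if len(self.options) != 3:
            raise ValueError("expected three options")
        if sum(o.correct for o in self.options) != 1:
            raise ValueError("expected exactly one correct option")

    def respond(self, choice: int) -> Tuple[bool, str]:
        """Return (is_correct, feedback) for the option at index `choice`."""
        option = self.options[choice]
        return option.correct, option.feedback


# Placeholder content, invented for illustration; not taken from the real training.
example = Scenario(
    candidate_utterance="<candidate statement>",
    options=[
        Option("<open follow-up question>", True, "<why this works>"),
        Option("<closed Yes/No question>", False, "<why this limits output>"),
        Option("<off-topic question>", False, "<why this limits output>"),
    ],
    mentor_help="<mentor advice>",
)
example.validate()
```

Planning each slide as a record like this makes it easy to confirm, before any visual design starts, that every scenario has one correct option and feedback for all three.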

Visual Design

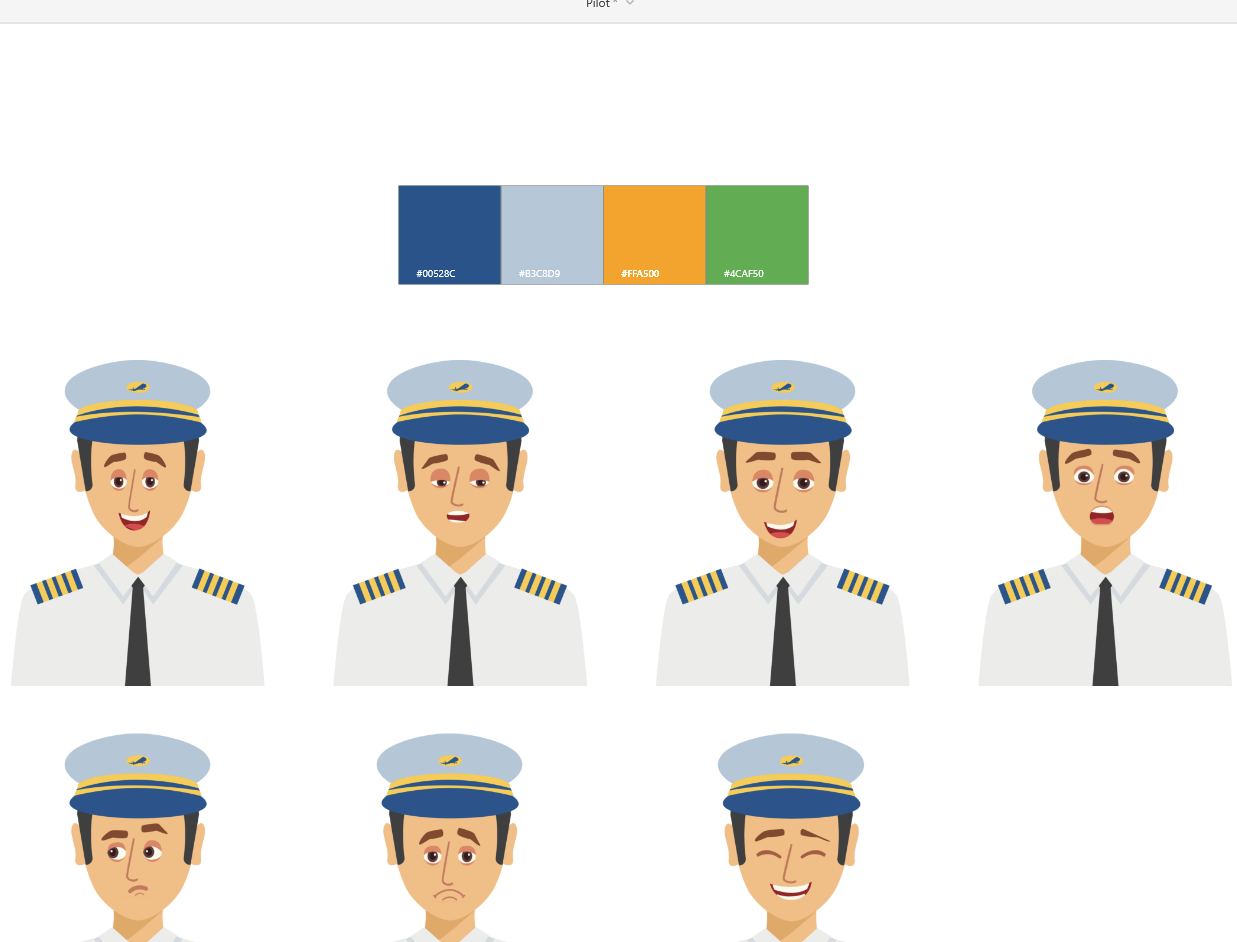

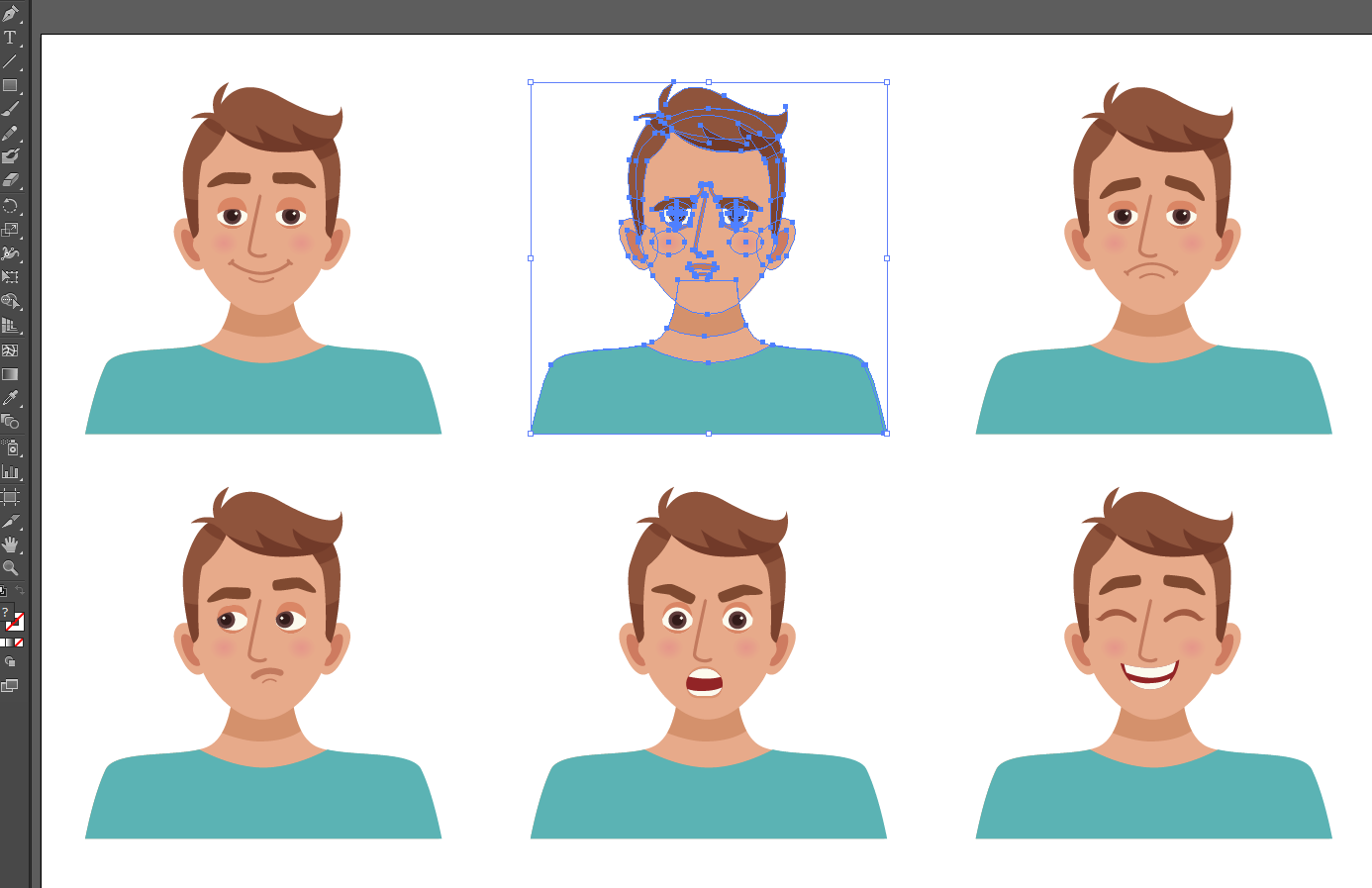

Expressive Avatar for the Candidate:

- To create a realistic examination scenario, a bespoke approach was taken to develop an expressive avatar for the pilot candidate. Few pre-existing pilot-themed avatars were available, so a blank-faced pilot vector image was obtained and a range of vector facial expressions was added to it in Adobe Illustrator. The result was a set of expressive visuals for the pilot candidate, enhancing the authenticity of the simulation.

Consistent Colour Theme:

- To maintain visual coherence and align with the company’s brand identity, the colour theme was selected based on existing brand colours. This consistency not only reinforced the brand but also contributed to a seamless and professional visual experience for users.

The iterative design process involved creating sample slides in Adobe XD, allowing for collaborative feedback from the team. This visual prototype served as a preview, ensuring that the envisioned design met the project’s objectives and effectively conveyed the training content.

Subsequently, the approved layouts were replicated in Articulate Storyline, and the pre-written storyboards were implemented. This methodical approach ensured a smooth transition from conceptualization to execution, resulting in a visually compelling and pedagogically effective e-learning experience for examiners.

Features

The e-learning produced as a result of this process has a number of noteworthy features:

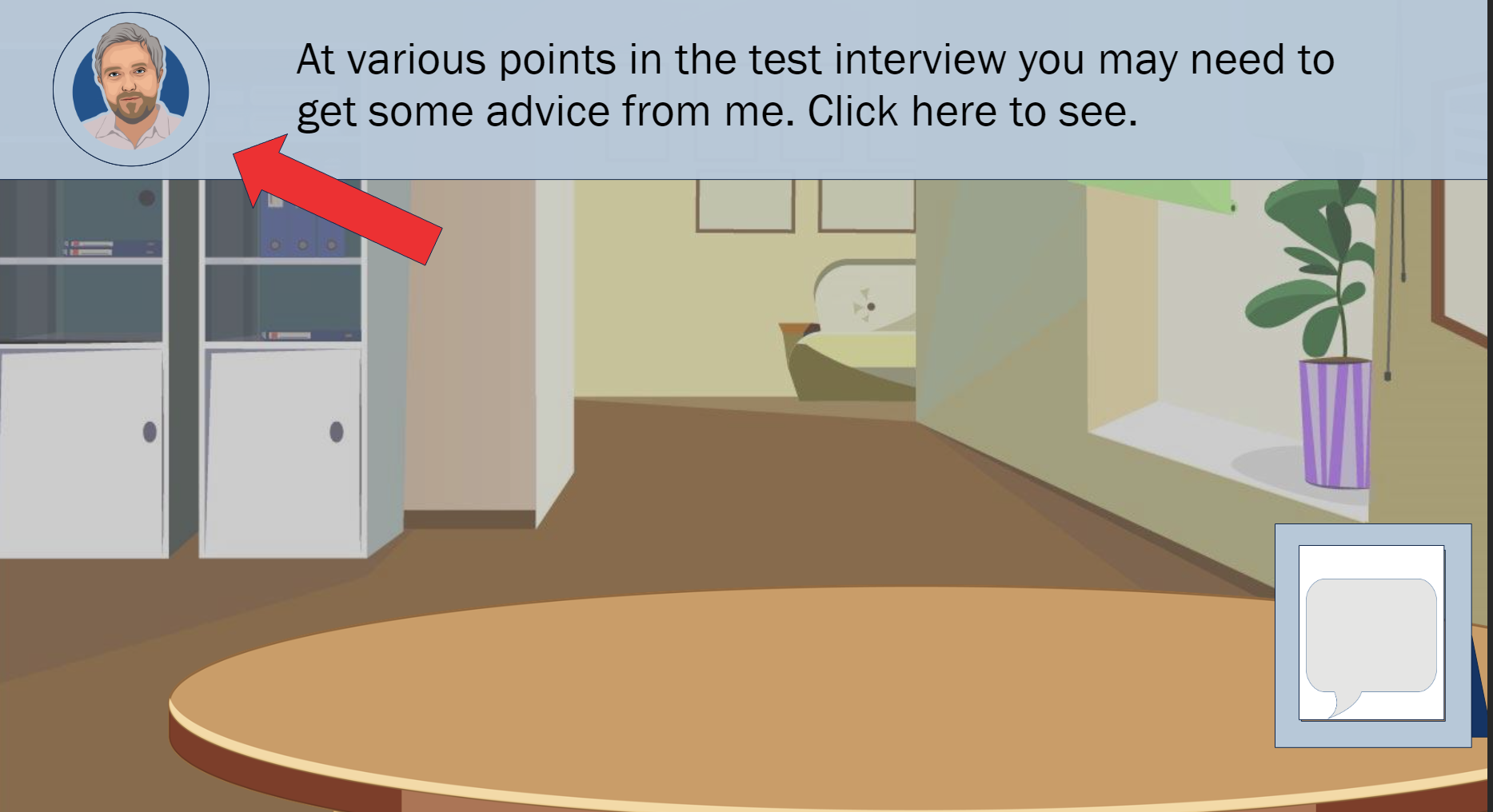

GAMIFICATION – SPEECH BUBBLE

To add a touch of fun to the training, a speech bubble placed on the examiner’s desk undergoes dynamic changes. Not only does it alter in colour, but it also expands in size as the user advances through the training. This subtle yet engaging feature serves as a lighthearted progress indicator, enhancing the overall user experience.

PRESCRIPTIVE FEEDBACK – MENTOR BEN

Introducing a mentor character named Ben, based on the familiar face of the TEAC senior examiner, offers users a valuable resource for guidance. By interacting with Mentor Ben, users can access tailored advice relevant to the specific scenario. The advice is presented in a conversational tone akin to face-to-face training situations, fostering a sense of familiarity and ease of understanding.

AUDIO FEEDBACK

Positive and negative feedback is reinforced through the integration of sound effects. This audio element adds an extra layer to the learning experience, providing immediate and auditory cues to highlight the correctness or shortcomings of the user’s actions.

VISUAL FEEDBACK

The candidate’s facial expressions play a pivotal role in offering meaningful feedback to the user regarding the suitability of their Extension Questions. Through animations and colour changes, success or lack thereof in selecting appropriate Extension Questions is visually conveyed. This dual feedback mechanism, combining visual and auditory elements, enriches the learning process by offering multiple channels for user comprehension.

By integrating these features, the e-learning module not only imparts valuable content but also prioritizes user engagement and comprehension through elements of gamification, prescriptive feedback, and multi-sensory reinforcement.

Lessons Learned

Initial trials revealed that the e-learning format significantly outperformed the previous PDF-based training in terms of memorability and engagement. However, for future iterations, there is room for improvement.

- Pathways:

- To elevate engagement and replayability, consider implementing a more intricate branching pathway system. Instead of redirecting users to the original prompt, this approach would create a dynamic environment where users aim to achieve the highest score possible. Restricting the ability to replay scenarios adds a layer of challenge and encourages users to navigate through a variety of situations. This could be further enhanced with the inclusion of additional scenarios, providing a more diversified learning experience.

- Additional Situations:

- Explore the possibility of incorporating a pool of questions or modifying and expanding existing question types. This adaptation would allow the training to be reused for annual standardization purposes, ensuring that the content remains relevant and provides ongoing value for examiners. By introducing new situations or refining existing ones, the training can continue to evolve, addressing the dynamic nature of language testing in aviation.

By incorporating these insights into future iterations, the training can continue to evolve, maintaining high levels of engagement and effectiveness while adapting to the changing needs of the testing environment.

Other Projects

Here are some other projects that showcase my abilities with e-learning tools:

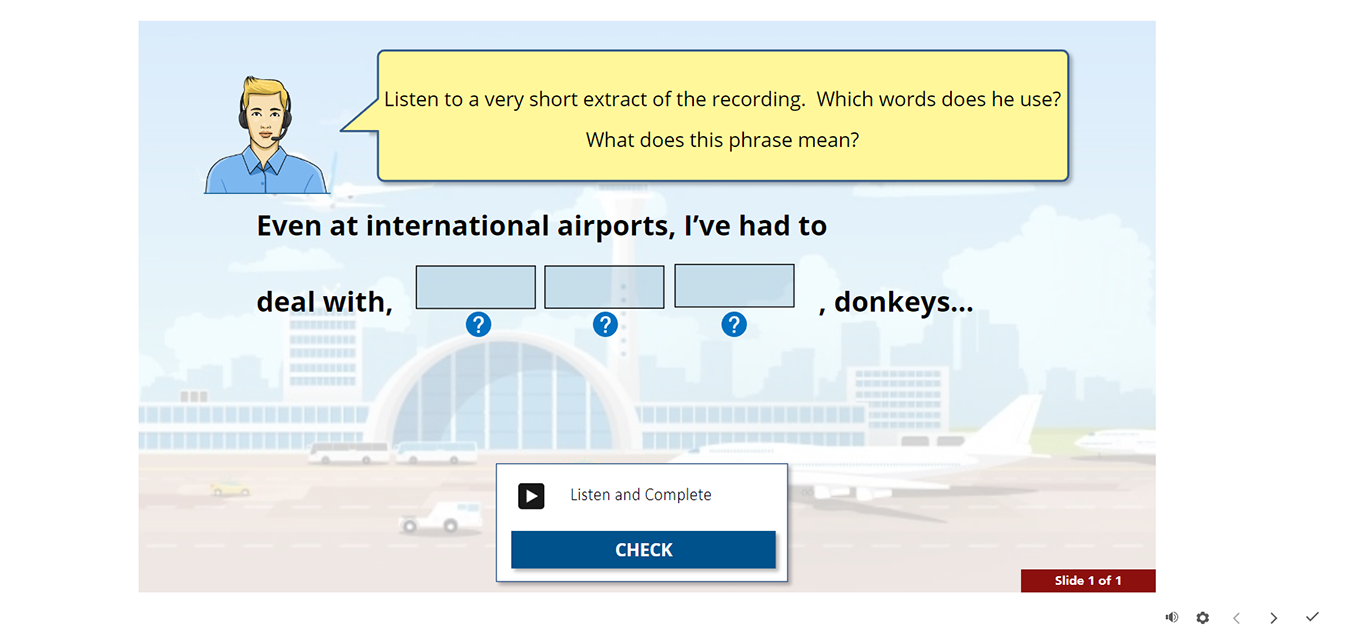

Aviation English Close Listening

TOOLS: Articulate Storyline / Audacity / Adobe Illustrator / JavaScript

This Storyline object recreates a successful classroom-based listening practice exercise focussing on listening to short scripts, in order to bring cognitive awareness to micro-listening functions.

The difficulty was in finding a way to enable rapid development of Storyline objects to accommodate many different scripts, but a solution was found using True / False Storyline objects. Now many such items can be produced in relatively short time scales.

Examiner Training

TOOLS: Articulate Rise / Audacity / Articulate Storyline

A successful face-to-face Examiner Trainer course needed to be converted to a blended learning solution. Articulate Rise was the most suitable for this, as it allowed a mixed-media approach to the input sessions, while the discussions could be held over Zoom or Teams.

This short extract showcases how Rise was used as a vehicle to convey some of the text-heavy material using text, visuals and audio as well as interactive elements.

Phonemic Trainer

TOOLS: Articulate Storyline / Audacity

This tool was produced to help language test examiners to discuss Pronunciation. It was found that many of the examiners did not know the meaning of the IPA symbols used to discuss Pronunciation of standard English.

This simple Storyline object was created by cutting up sound files using Audacity to exemplify the phonetic sound and example word containing that phoneme, as well as highlighting the syllable containing the sound in the example word.

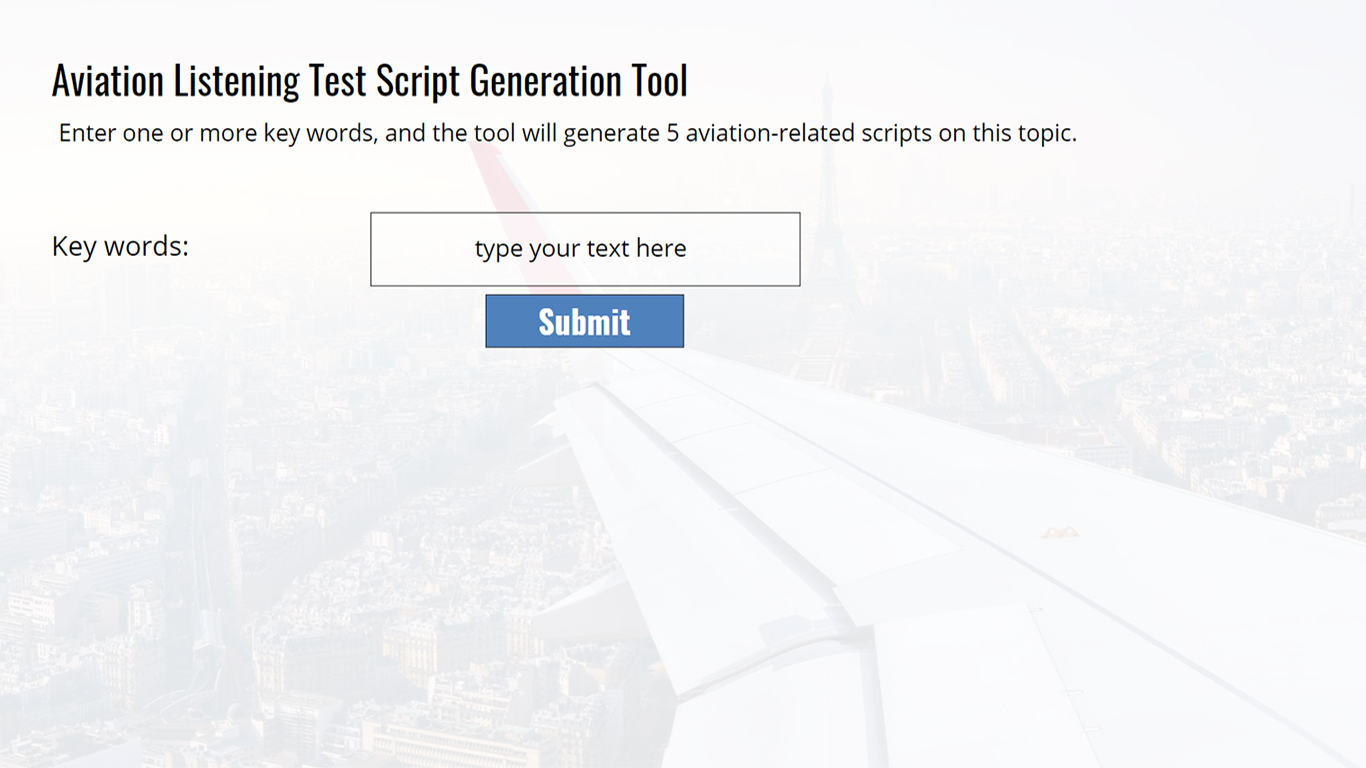

Test Script Generator

TOOLS: ChatGPT / Articulate Storyline

Subject Matter Experts are asked to generate scripts that can be used to produce test audio recordings, but often complain that they lack inspiration.

This simple tool uses the ChatGPT API with a prompt that converts key words (e.g. “thunderstorm” / “runway problems”) into five aviation-related scripts, which the SMEs can either use as they are or edit to make them suitable for conversion into audio recordings. This speeds up test item production.
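The key-word-to-prompt step might look something like the sketch below. It is an assumption-laden illustration, not the tool's actual code: the prompt wording and the function names are hypothetical, and the model call is passed in as a caller-supplied `complete` function so that no particular API client or signature is implied.

```python
from typing import Callable, List


def build_prompt(keywords: List[str], n_scripts: int = 5) -> str:
    """Turn SME key words into a single instruction for the language model.

    The wording here is a hypothetical example, not the prompt used in the tool.
    """
    cleaned = [k.strip() for k in keywords if k.strip()]
    if not cleaned:
        raise ValueError("at least one keyword is required")
    return (
        f"Write {n_scripts} short aviation-related scripts suitable for "
        f"test audio recordings. Base them on these key words: {', '.join(cleaned)}."
    )


def generate_scripts(
    keywords: List[str],
    complete: Callable[[str], str],
    n_scripts: int = 5,
) -> str:
    """Send the prompt through `complete`, a caller-supplied wrapper around the model API."""
    return complete(build_prompt(keywords, n_scripts))
```

Keeping the prompt builder separate from the API call means the prompt can be reviewed and adjusted by SMEs without touching any networking code.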